Fortifying Cloud Vaults: A Guide to Securing Cloud Storage – Part 1

In today’s digital age, organisations and individuals increasingly rely on cloud storage services to store and manage their data. While the cloud offers numerous benefits, such as scalability and accessibility, it also introduces new security challenges, especially in multi-cloud environments. In this blog post, we’ll explore strategies and best practices for securing your data in the cloud and protecting it from unauthorised access, data breaches and other security threats.

In this blog series, we will mainly focus on Amazon Web Services (AWS) S3 and Microsoft’s Azure Blob Storage. We’ll dig into the complexities of securing data stored in these services, exploring encryption features, access control mechanisms, data integrity measures and compliance offerings. Through this discussion, we aim to show how organisations can effectively protect their data assets in the cloud by applying the security features and best practices provided by AWS S3 and Azure Blob Storage.

In this multi-part series, we will explore the key features and security best practices of the cloud storage services provided by these industry giants. In Part 1, we’ll delve into AWS’s Simple Storage Service (S3), examining its storage capabilities and security features. In Part 2, we’ll shift our focus to Microsoft Azure’s Blob Storage, exploring its scalability, integration options and compliance offerings. Finally, in Part 3, we’ll cover Google Cloud Platform’s Cloud Storage, highlighting its storage options and data management capabilities.

Before delving into AWS S3, Azure Blob Storage and Google Cloud Storage buckets, it’s crucial to understand the fundamentals of encryption, both at rest and in transit.

Encryption at Rest and in Transit:

Encryption serves as a cornerstone in data security, ensuring that sensitive information remains protected from unauthorised access, whether it’s stored or transmitted. Here’s why it’s imperative:

- Protecting Data in Transit: When data travels between a client and a server, it’s susceptible to interception by malicious actors. Encrypting data in transit ensures that even if intercepted, it remains indecipherable to unauthorised entities. This is particularly vital for securing communications over public networks like the internet.

- Securing Data at Rest: Data stored in databases or cloud repositories is equally vulnerable to breaches. Encrypting data at rest ensures that even if unauthorised individuals gain access to the storage infrastructure, they won’t be able to decipher the encrypted data without the appropriate decryption keys.

Amazon S3 (Simple Storage Service):

Amazon S3 is a scalable object storage service provided by Amazon Web Services (AWS). It allows you to store and retrieve any amount of data, ranging from small files to large datasets, over the internet. Here’s an overview of Amazon S3’s key features and capabilities:

- Scalability & Availability: Highly scalable and available storage solution designed to handle virtually unlimited amounts of data with low latency.

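- Durability: S3 is designed for 99.999999999% (11 nines) durability, achieved by storing objects redundantly across multiple devices in at least three Availability Zones (physically separate groups of data centres) within a Region; the One Zone storage classes keep data in a single Availability Zone.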

- Security: Robust security features, including encryption at rest and in transit, access control policies, and integration with AWS IAM for granular access control.
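On S3, encryption in transit is commonly enforced with a bucket policy that denies any request not sent over TLS, using the `aws:SecureTransport` condition key. The helper below is a minimal sketch that prints such a policy for a given bucket; the function name `tls_only_policy` is hypothetical. Because `put-bucket-policy` replaces a bucket's entire policy, this statement should be merged into any existing policy rather than applied on its own.

```shell
# Hypothetical helper: print a bucket policy that denies every S3 request
# made over plain HTTP (aws:SecureTransport evaluates to "false" without TLS).
# Usage: tls_only_policy <bucket_name> > tls-only-policy.json
tls_only_policy() {
  bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyInsecureTransport",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::${bucket}",
        "arn:aws:s3:::${bucket}/*"
      ],
      "Condition": {
        "Bool": { "aws:SecureTransport": "false" }
      }
    }
  ]
}
EOF
}
```

Both the bucket ARN and the `/*` object ARN are listed so that bucket-level calls (such as listing) and object-level calls are covered by the deny.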

S3 Bucket Default Encryption

Risk Level: High

When handling sensitive or critical data, it is strongly advised to enable encryption at rest to protect your S3 data from unauthorised access. Encryption at rest can be applied at the bucket level (S3 Default Encryption) and at the object level (by requesting server-side encryption on an individual upload). S3 Default Encryption sets the default encryption behaviour for an S3 bucket: once enabled, every new object uploaded to that bucket is encrypted automatically. Objects already stored in the bucket are not re-encrypted when the setting changes.

How can you check whether an S3 bucket holding sensitive data is encrypted?

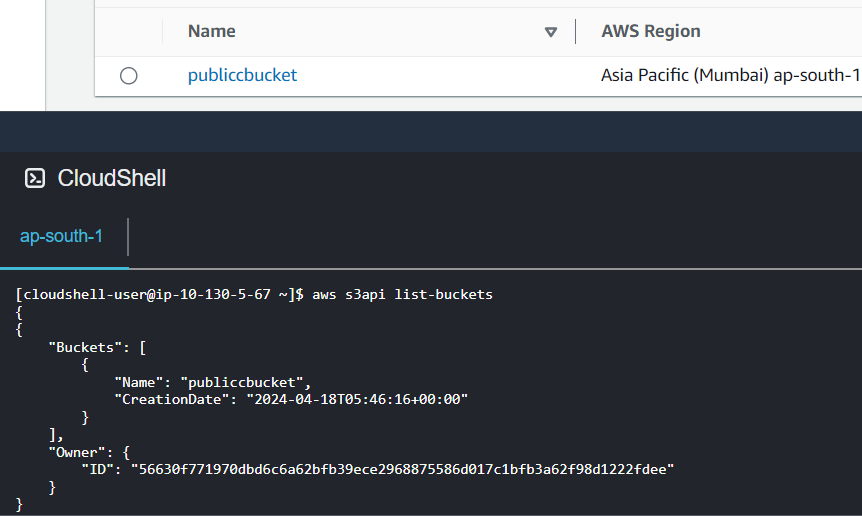

First retrieve a list of all Amazon S3 buckets present in your AWS cloud account.

aws s3api list-buckets --query 'Buckets[*].Name'

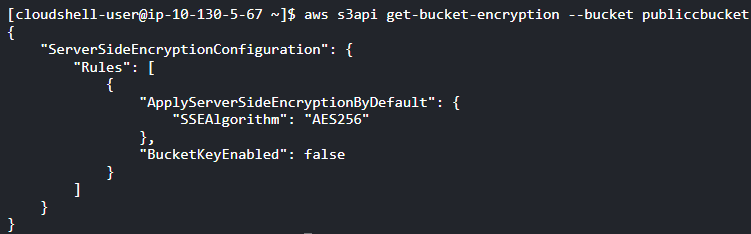

Execute the “get-bucket-encryption” command with the “--bucket” parameter set to the name of your Amazon S3 bucket. This command returns the S3 Default Encryption configuration of the specified bucket.

aws s3api get-bucket-encryption --bucket <Bucket_Name>

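If the “get-bucket-encryption” command fails with the error “ServerSideEncryptionConfigurationNotFoundError”, S3 Default Encryption is not enabled for that bucket. Note that since January 2023 Amazon S3 applies SSE-S3 as the base level of encryption to every bucket, so on current accounts the command normally returns a configuration; in that case, check the “SSEAlgorithm” value to see whether the bucket uses SSE-S3 (“AES256”) or SSE-KMS (“aws:kms”).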
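Running `get-bucket-encryption` bucket by bucket gets tedious in larger accounts. The loop below is a minimal sketch that combines the two commands above and prints the default encryption algorithm of every bucket. The function name is hypothetical, and a bucket whose configuration cannot be read (no configuration, or insufficient permissions) is reported as not configured.

```shell
# Hypothetical helper: print "<bucket>: <algorithm>" for every bucket in the account.
# S3 bucket names cannot contain whitespace, so word-splitting the list is safe.
report_default_encryption() {
  for bucket in $(aws s3api list-buckets --query 'Buckets[*].Name' --output text); do
    # Read the algorithm of the first default-encryption rule (AES256 or aws:kms).
    algo=$(aws s3api get-bucket-encryption --bucket "$bucket" \
      --query 'ServerSideEncryptionConfiguration.Rules[0].ApplyServerSideEncryptionByDefault.SSEAlgorithm' \
      --output text 2>/dev/null) || algo="NOT CONFIGURED (or access denied)"
    echo "$bucket: $algo"
  done
}
```

Errors from the CLI are discarded so that one unreadable bucket does not interrupt the report; drop `2>/dev/null` to see the underlying error message.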

How can you make sure encryption is enabled for an S3 bucket?

Leading cloud storage services like AWS S3 offer robust encryption features to enhance data security:

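- AWS S3’s Server-Side Encryption (SSE): With SSE, S3 encrypts each object with 256-bit AES (AES-256) before writing it to disk and decrypts it transparently when an authorised request reads it. Its three main modes differ in who manages the keys: SSE-S3 (keys managed by Amazon S3), SSE-KMS (keys held in AWS Key Management Service, governed by key policies, with key usage logged in AWS CloudTrail) and SSE-C (keys supplied by the customer with every request). SSE protects data at rest; it is not end-to-end encryption, so data must also be protected in transit (TLS) or encrypted client-side before upload where that is required.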
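At the object level, the mode is chosen on each upload. The function below is a minimal sketch that wraps `aws s3 cp` and its `--sse`, `--sse-kms-key-id`, `--sse-c` and `--sse-c-key` options; the function name and argument order are hypothetical. With SSE-C, S3 does not keep your key, so the same key file must be supplied for every later download, and losing it makes the object unreadable.

```shell
# Hypothetical helper: upload one file with an explicit server-side encryption mode.
# Usage: upload_encrypted <sse-s3|sse-kms|sse-c> <local_file> <s3_uri> [kms_key_id|sse_c_key_file]
upload_encrypted() {
  mode="$1"; src="$2"; dest="$3"; key="$4"
  case "$mode" in
    sse-s3)
      # Keys managed entirely by Amazon S3.
      aws s3 cp "$src" "$dest" --sse AES256 ;;
    sse-kms)
      # Keys held in AWS KMS; without a key ID, S3 uses the AWS managed key (aws/s3).
      if [ -n "$key" ]; then
        aws s3 cp "$src" "$dest" --sse aws:kms --sse-kms-key-id "$key"
      else
        aws s3 cp "$src" "$dest" --sse aws:kms
      fi ;;
    sse-c)
      # Customer-provided 256-bit key, read as raw bytes from a local file.
      aws s3 cp "$src" "$dest" --sse-c AES256 --sse-c-key "fileb://$key" ;;
    *)
      echo "unknown mode: $mode" >&2
      return 1 ;;
  esac
}
```

For SSE-C, a 32-byte key file can be generated with `openssl rand -out sse-c.key 32`, after which `upload_encrypted sse-c report.pdf s3://<Bucket_Name>/report.pdf sse-c.key` uploads the file with that key.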

To enable S3 Default Encryption for your existing Amazon S3 buckets, perform the following operations:

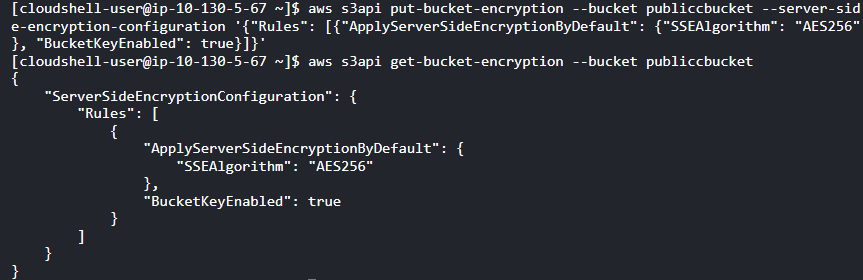

- Method-1: Enable Default Encryption using Amazon S3 managed keys (SSE-S3): Execute the “put-bucket-encryption” command to activate S3 Default Encryption for the designated Amazon S3 bucket, using Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3).

aws s3api put-bucket-encryption \
  --bucket <Bucket_Name> \
  --server-side-encryption-configuration '{
    "Rules": [
      {
        "ApplyServerSideEncryptionByDefault": {
          "SSEAlgorithm": "AES256"
        },
        "BucketKeyEnabled": true
      }
    ]
  }'

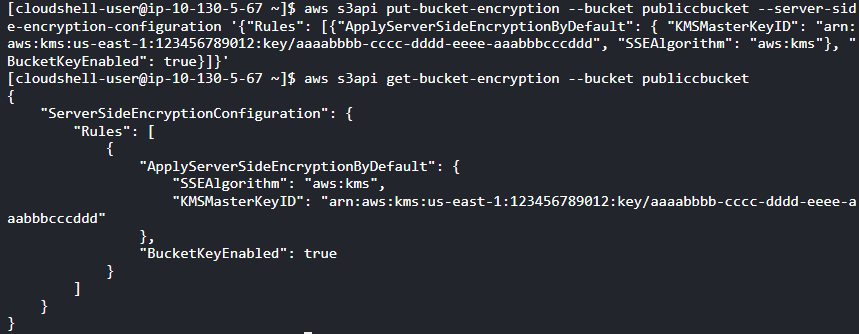

- Method-2: Enable Default Encryption using an AWS KMS key (SSE-KMS): Use the “put-bucket-encryption” command to enable S3 Default Encryption for the specified Amazon S3 bucket, employing Server-Side Encryption with AWS Key Management Service keys (SSE-KMS). Replace the example key ARN with the ARN of your own KMS key.

aws s3api put-bucket-encryption \
  --bucket <Bucket_Name> \
  --server-side-encryption-configuration '{
    "Rules": [
      {
        "ApplyServerSideEncryptionByDefault": {
          "KMSMasterKeyID": "arn:aws:kms:us-east-1:123456789012:key/aaaabbbb-cccc-dddd-eeee-aaabbbcccddd",
          "SSEAlgorithm": "aws:kms"
        }
      }
    ]
  }'
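After applying either method, you can confirm which algorithm the bucket now uses by default. The function below is a minimal sketch with a hypothetical name; it relies only on `get-bucket-encryption` with a JMESPath `--query` and returns a non-zero status when the bucket does not match the expected algorithm.

```shell
# Hypothetical helper: succeed only if <bucket> defaults to <expected> (AES256 or aws:kms).
# Usage: verify_default_encryption <bucket_name> <AES256|aws:kms>
verify_default_encryption() {
  bucket="$1"; expected="$2"
  actual=$(aws s3api get-bucket-encryption --bucket "$bucket" \
    --query 'ServerSideEncryptionConfiguration.Rules[0].ApplyServerSideEncryptionByDefault.SSEAlgorithm' \
    --output text 2>/dev/null) || actual="none"
  if [ "$actual" = "$expected" ]; then
    echo "$bucket: OK ($actual)"
  else
    echo "$bucket: expected $expected, found $actual" >&2
    return 1
  fi
}
```

For example, `verify_default_encryption <Bucket_Name> aws:kms` fails if the bucket is still on SSE-S3 or has no readable configuration, so it can serve as a check in automation scripts.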

S3 Bucket Public ‘FULL_CONTROL’ Access

Risk Level: Very High (take action immediately)

To avoid unauthorised access, ensure that your Amazon S3 buckets do not grant FULL_CONTROL access to anonymous users (that is, public access). A bucket ACL that grants FULL_CONTROL to everyone lets anyone list the bucket’s objects (READ), upload, overwrite and delete objects (WRITE), and view (READ_ACP) and edit (WRITE_ACP) the bucket’s permissions. It is strongly recommended not to grant these permissions to the “Everyone (public access)” grantee in production environments because of the associated high risk.

Granting public FULL_CONTROL access to your Amazon S3 buckets enables anyone on the Internet to freely view, upload, modify, and delete S3 objects without any limitations. This exposure of S3 buckets to the public Internet poses significant risks, including potential data leaks and loss.

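Amazon Simple Storage Service (S3) offers granular control over access permissions at the bucket and object level through a combination of Access Control Lists (ACLs) and Bucket Policies. Here’s how it works:

Access Control Lists (ACLs): ACLs can be attached to a bucket or to individual objects within it. They define which AWS accounts or predefined groups (such as Everyone/AllUsers) are granted which permissions, and different objects in the same bucket can carry different ACLs. S3 ACLs have no separate delete permission; the available permissions are:

- READ: on a bucket, grants the ability to list its objects; on an object, grants the ability to read its contents and metadata.

- WRITE: on a bucket, grants the ability to create, overwrite and delete objects in it; it does not apply to individual objects.

- READ_ACP and WRITE_ACP: grant the ability to read or modify the ACL itself, and FULL_CONTROL grants all of the above.

Since April 2023, new buckets have ACLs disabled by default (Object Ownership set to “Bucket owner enforced”), and AWS recommends controlling access with policies instead of ACLs.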

Bucket Policies: In addition to ACLs, S3 allows you to define bucket-wide policies using JSON-based syntax. These policies can be used to grant or deny permissions at a more granular level, including access to specific objects or groups of objects based on various conditions such as IP address, user agent, or request source.

- Example Policy: You could create a policy that allows users within your organisation to read and write objects within a certain prefix (folder) in the bucket, but deny them permission to delete those objects.
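As a minimal sketch of that example, the function below prints a bucket policy in which one statement allows `s3:GetObject` and `s3:PutObject` under a prefix and a second, explicit `Deny` blocks `s3:DeleteObject` and `s3:DeleteObjectVersion` there. The function name, the use of the organisation's account root ARN as the principal and the prefix layout are assumptions. Because an explicit Deny overrides any Allow, administrators are also blocked from deleting under that prefix until the policy changes, and IAM users in the named account typically still need matching identity-based permissions to use the Allow.

```shell
# Hypothetical helper: print a policy that lets one account read/write under a prefix
# while denying object deletion under that prefix to every principal.
# Usage: prefix_rw_no_delete_policy <bucket_name> <prefix> <account_id>
prefix_rw_no_delete_policy() {
  bucket="$1"; prefix="$2"; account="$3"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowReadWriteUnderPrefix",
      "Effect": "Allow",
      "Principal": { "AWS": "arn:aws:iam::${account}:root" },
      "Action": ["s3:GetObject", "s3:PutObject"],
      "Resource": "arn:aws:s3:::${bucket}/${prefix}/*"
    },
    {
      "Sid": "DenyDeleteUnderPrefix",
      "Effect": "Deny",
      "Principal": "*",
      "Action": ["s3:DeleteObject", "s3:DeleteObjectVersion"],
      "Resource": "arn:aws:s3:::${bucket}/${prefix}/*"
    }
  ]
}
EOF
}
```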

How can you check whether an S3 bucket is publicly accessible?

To determine if your Amazon S3 buckets are exposed to the Internet, you can perform the following operations:

Check Bucket Policies and Access Control Lists (ACLs):

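- Review the bucket policies and ACLs associated with your S3 buckets to verify whether any configuration allows public access. In bucket policies, look for “Allow” statements whose “Principal” is “*”; in ACLs, look for grants to the “Everyone” group (AllUsers, URI http://acs.amazonaws.com/groups/global/AllUsers) or the “Authenticated Users” group (any AWS account).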

Retrieve a list of all Amazon S3 buckets present in your AWS account:

aws s3api list-buckets --query 'Buckets[*].Name'

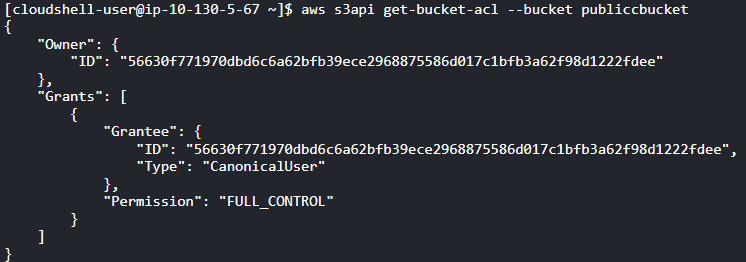

Then describe the Access Control List (ACL) configured for each bucket:

aws s3api get-bucket-acl --bucket <Bucket_Name>

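If the output of the “get-bucket-acl” command contains a grant whose “Grantee” has the “URI” http://acs.amazonaws.com/groups/global/AllUsers and whose “Permission” is “FULL_CONTROL”, as demonstrated in the example above, the chosen Amazon S3 bucket is publicly accessible on the Internet. Consequently, the bucket’s ACL configuration is insecure and does not meet compliance standards. A “FULL_CONTROL” grant to the bucket owner’s own canonical user ID, by contrast, is present on every bucket and does not indicate public access.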
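To audit every bucket rather than reading each ACL by hand, the loop below filters each bucket's grants for the two predefined public groups, AllUsers (anyone on the Internet) and AuthenticatedUsers (any AWS account), and prints the permissions they hold. It is a minimal sketch with a hypothetical function name; buckets whose ACL cannot be read are skipped silently.

```shell
# Hypothetical helper: print "<bucket>: <permissions>" for buckets whose ACL
# grants anything to the AllUsers or AuthenticatedUsers groups.
find_public_acl_buckets() {
  all_users='http://acs.amazonaws.com/groups/global/AllUsers'
  auth_users='http://acs.amazonaws.com/groups/global/AuthenticatedUsers'
  # JMESPath filter keeping only the grants made to the two public groups.
  query="Grants[?Grantee.URI=='$all_users' || Grantee.URI=='$auth_users'].Permission"
  for bucket in $(aws s3api list-buckets --query 'Buckets[*].Name' --output text); do
    grants=$(aws s3api get-bucket-acl --bucket "$bucket" --query "$query" --output text 2>/dev/null)
    case "$grants" in
      ""|None) ;;                       # no public grants (or ACL unreadable)
      *) echo "$bucket: $grants" ;;
    esac
  done
}
```

Filtering on the grantee URI, rather than on the permission, avoids flagging the owner's own FULL_CONTROL grant.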

How can you modify the ACL to limit access?

To restrict public FULL_CONTROL access to your Amazon S3 buckets using Access Control Lists (ACLs), follow these steps:

Execute the “put-bucket-acl” command with the name of your Amazon S3 bucket as the “--bucket” parameter. The “private” canned ACL replaces the bucket’s entire ACL so that only the bucket owner keeps FULL_CONTROL. This removes the grant to the Everyone (public access) grantee, together with any other grants, and denies public FULL_CONTROL access to the specified S3 bucket.

aws s3api put-bucket-acl --bucket <Bucket_Name> --acl private
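Setting the bucket ACL to `private` does not change the ACLs of objects already in the bucket, so an object uploaded earlier with a public ACL can remain readable. The function below is a minimal sketch (hypothetical name) that resets the ACL of every current object to `private`. It does not touch older versions in a versioned bucket, and on buckets with ACLs disabled (Object Ownership set to "Bucket owner enforced") object ACLs no longer affect access, so the step is unnecessary there.

```shell
# Hypothetical helper: reset every current object's ACL in <bucket> to "private".
# Keys containing tab or newline characters are not handled by this sketch.
reset_object_acls() {
  bucket="$1"
  aws s3api list-objects-v2 --bucket "$bucket" --query 'Contents[].Key' --output text \
    | tr '\t' '\n' \
    | while IFS= read -r key; do
        # Skip blank lines and the literal "None" printed for an empty bucket.
        if [ -z "$key" ] || [ "$key" = "None" ]; then
          continue
        fi
        # </dev/null keeps the CLI from consuming the list of keys on stdin.
        aws s3api put-object-acl --bucket "$bucket" --key "$key" --acl private </dev/null
      done
}
```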

S3 Cross-account Access

Risk Level: High

To safeguard against unauthorised cross-account access, configure all your Amazon S3 buckets to permit access only to trusted AWS accounts. Start by defining the list of trusted accounts, as valid AWS account IDs and/or AWS account ARNs, against which each bucket policy can be checked.

Enabling untrusted cross-account access to your Amazon S3 buckets through bucket policies can result in unauthorised actions, including viewing, uploading, modifying, or deleting S3 objects.

How can you check whether an S3 bucket allows cross-account access?

To identify Amazon S3 buckets that permit unknown cross-account access, take the following steps:

Execute the “list-buckets” command with custom query filters to display the names of all Amazon S3 buckets accessible in your AWS cloud account.

aws s3api list-buckets --query 'Buckets[*].Name'

Execute the “get-bucket-policy” command, providing the name of the Amazon S3 bucket you wish to examine as the identifier parameter. This command will describe the bucket policy attached to the selected S3 bucket in JSON format.

aws s3api get-bucket-policy --bucket <Bucket_Name> --query Policy --output text

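In the policy document returned by the “get-bucket-policy” command, locate the AWS account IDs and/or AWS account ARNs specified as values of the “Principal” element in statements with “Effect”: “Allow”. If any of them does not belong to a trusted AWS account, or the principal is the wildcard “*”, the bucket allows untrusted cross-account access.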
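For a quick first pass across many buckets, the function below is a rough, text-based heuristic rather than a JSON parser: it extracts every 12-digit account ID from a bucket's policy, reports those not on your trusted list, and flags a wildcard `"*"` principal. The function name and the space-separated trusted list are assumptions. Because it ignores the `Effect` and `Condition` elements, its output is a list of candidates to review; a JSON tool such as jq would give a precise answer.

```shell
# Hypothetical helper: heuristically list principals in <bucket>'s policy that are not trusted.
# Usage: find_untrusted_principals <bucket_name> "<trusted_id_1> <trusted_id_2> ..."
find_untrusted_principals() {
  bucket="$1"; trusted="$2"
  # No policy (NoSuchBucketPolicy) or no permission: nothing to report.
  policy=$(aws s3api get-bucket-policy --bucket "$bucket" --query Policy --output text 2>/dev/null) || return 0
  # Flag a wildcard principal written as "Principal": "*" or "AWS": "*".
  if printf '%s\n' "$policy" | grep -qE '"(AWS|Principal)" *: *"\*"'; then
    echo "$bucket: wildcard principal"
  fi
  # Treat any 12-digit number as a candidate AWS account ID.
  printf '%s\n' "$policy" | grep -oE '[0-9]{12}' | sort -u | while read -r account; do
    case " $trusted " in
      *" $account "*) ;;                # on the trusted list
      *) echo "$bucket: untrusted account $account" ;;
    esac
  done
}
```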

How can you update the bucket policy to remove untrusted cross-account access?

Revise the policy document attached to the specified Amazon S3 bucket. Replace the untrusted AWS account ID or AWS account ARN within the “Principal” element with the account ID or ARN of a trusted AWS account from your list, then save the updated policy document as a JSON file (for example, sample-policy.json).

Sample Policy:

{
  "Id": "cc-s3-prod-access-policy",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::123412341234:root"
      },
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::<Bucket_Name>",
        "arn:aws:s3:::<Bucket_Name>/*"
      ]
    }
  ]
}

Execute the “put-bucket-policy” command (macOS/Linux/UNIX) to replace the non-compliant bucket policy attached to the chosen Amazon S3 bucket with the policy modified in the previous step. This command replaces the whole policy rather than merging statements.

aws s3api put-bucket-policy --bucket <Bucket_Name> --policy file://sample-policy.json
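To avoid editing placeholders by hand, the function below is a minimal sketch (hypothetical name and arguments) that writes a policy with the same shape as the sample, granting one trusted account access with the bucket and its objects listed as separate resources, and then attaches it. Since `put-bucket-policy` replaces the bucket's whole policy, any other statements the bucket needs must be added to the generated file before it is applied.

```shell
# Hypothetical helper: write a policy trusting one account, then attach it to the bucket.
# Usage: apply_trusted_account_policy <bucket_name> <trusted_account_id> [policy_file]
apply_trusted_account_policy() {
  bucket="$1"; account="$2"; file="${3:-sample-policy.json}"
  cat > "$file" <<EOF
{
  "Id": "cc-s3-prod-access-policy",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "AWS": "arn:aws:iam::${account}:root" },
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::${bucket}",
        "arn:aws:s3:::${bucket}/*"
      ]
    }
  ]
}
EOF
  aws s3api put-bucket-policy --bucket "$bucket" --policy "file://$file"
}
```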

Conclusion

Securing your cloud storage, particularly on platforms like AWS S3, is fundamental for safeguarding sensitive data and ensuring compliance with regulations. By adhering to best practices, you can effectively mitigate risks and protect your organisation’s valuable assets.

Encryption stands as a cornerstone of data protection, whether data is at rest or in transit. Server-side encryption keeps the data on the provider’s storage media encrypted, and with SSE-KMS every read also requires permission to use the KMS key, adding a second, independent access check on top of the bucket’s own permissions.

Moreover, it is imperative to restrict public access to storage resources. Regularly auditing and removing any public access permissions in AWS S3 is essential to prevent inadvertent exposure of sensitive information to the internet.

Additionally, managing cross-account access is crucial, particularly in scenarios where multiple teams or external entities require access to the same storage resources.

In summary, securing cloud storage involves:

- Implementing encryption for data at rest and in transit.

- Removing public access permissions to prevent unauthorised exposure.

- Restricting cross-account access to trusted accounts through carefully scoped bucket policies.

By prioritising these security measures, businesses can confidently leverage the scalability and flexibility of cloud storage while minimising the associated risks.