Using Agentic LLMs in Penetration Testing: Practical Value, Risks and Responsible Use

Overview

Agentic Large Language Models (LLMs) represent an evolution in how AI can support cybersecurity operations. Rather than simply responding to prompts, these models can plan tasks, call tools, interpret outputs and adjust their actions. In penetration testing, this means they can help automate routine work, organize assessments and provide faster analytical support. At the same time, their ability to act autonomously requires careful control, oversight and clear ethical boundaries.

This article outlines how agentic LLMs can be applied in pentesting, the advantages they bring and the safeguards necessary to use them responsibly.

Understanding Agentic LLMs

Traditional LLM interactions follow a simple request-response pattern: you provide input and the model generates output. Agentic LLMs extend this by running the model in a loop that lets it take actions and react to their results.

They are designed to:

- Break down a high-level objective into smaller steps

- Use external tools such as scanners, APIs and scripts

- Evaluate the results of these tools

- Continue iterating without needing new prompts each time

Agentic LLMs for Penetration Testing

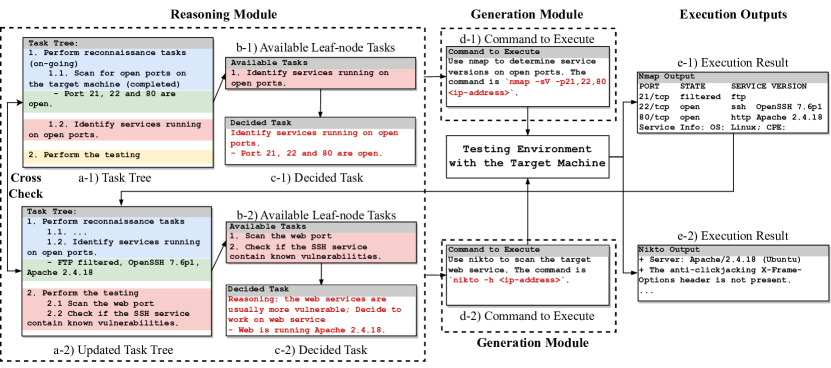

- Reasoning Module

  - The agent plans its actions.

  - It builds a task plan (like a “to-do” list) and updates it as it discovers new information.

  - It decides what to test next based on earlier findings.

- Generation Module

  - Once a task is chosen, the agent works out how to perform it.

  - It formulates the specific commands or tests needed to gather meaningful results.

  - It converts reasoning into executable steps.

- Execution & Feedback

  - The agent runs the tasks and collects the results.

  - Outputs are analyzed and fed back to the reasoning module.

  - This creates a continuous feedback loop in which each result shapes the next round of planning.
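The loop described above can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the task names, the canned tool outputs and the `reason`, `generate` and `execute` functions are stand-ins for what would, in a real agent, be LLM calls and sandboxed tools. It only shows how the plan, the chosen action and the feedback relate to one another, plus a hard step limit as a simple safety bound.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentState:
    objective: str
    plan: deque = field(default_factory=deque)    # the evolving "to-do" list
    scheduled: set = field(default_factory=set)   # every task ever planned
    findings: list = field(default_factory=list)  # (task, observation) pairs


def schedule(state: AgentState, task: str, urgent: bool = False) -> None:
    """Add a task to the plan at most once; urgent tasks go to the front."""
    if task in state.scheduled:
        return
    state.scheduled.add(task)
    if urgent:
        state.plan.appendleft(task)
    else:
        state.plan.append(task)


def reason(state: AgentState, observation: Optional[str]) -> None:
    """Reasoning module (stub): build or update the plan from the latest result."""
    if observation is None:
        schedule(state, "enumerate_services")
        schedule(state, "summarize_results")
    elif "http" in observation:
        # A newly discovered web service adds a follow-up task ahead of the summary.
        schedule(state, "review_web_headers", urgent=True)


def generate(task: str) -> dict:
    """Generation module (stub): turn the chosen task into a concrete action."""
    return {"tool": task, "args": {}}


def execute(action: dict) -> str:
    """Execution module (stub): return canned output instead of running a tool."""
    canned = {
        "enumerate_services": "open: 22/ssh, 443/https",
        "review_web_headers": "missing header: Content-Security-Policy",
        "summarize_results": "summary written",
    }
    return canned.get(action["tool"], "unknown tool")


def run_agent(objective: str, max_steps: int = 10) -> AgentState:
    state = AgentState(objective)
    reason(state, None)                    # build the initial plan
    for _ in range(max_steps):             # hard step limit as a safety bound
        if not state.plan:
            break
        task = state.plan.popleft()
        observation = execute(generate(task))
        state.findings.append((task, observation))
        reason(state, observation)         # feedback returns to reasoning
    return state
```

Calling `run_agent("lab host")` processes `enumerate_services`, then the follow-up `review_web_headers` that was added once a web service appeared, and finally `summarize_results`.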

Why Pentesters Are Exploring Agentic Models

Pentesting requires a balance of repetitive workflow steps and deep analytical reasoning. Agentic LLMs fit naturally into the repetitive and organizational side of this process.

They can accelerate reconnaissance by gathering publicly available information across a wide range of sources and organizing it into a more usable structure. They help maintain a consistent methodology by applying the same checks and logic across different targets. They can also serve as reference assistants, helping with documentation lookup, vulnerability descriptions and standards mappings (for example, linking a finding to its CWE entry).

Another major benefit is reporting. Report writing is one of the most time-consuming parts of penetration testing. Agentic LLMs can summarize findings, write descriptions, outline impact scenarios, and prepare remediation recommendations in a clear and structured format. This allows human testers to spend more of their time on deep technical investigation rather than documentation.
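One way to keep model-drafted reporting reviewable is to treat each finding as structured data and render the report from it. The sketch below is an assumption about how such a pipeline might look, not a standard format: the `Finding` fields, the severity scale and the `reviewed` flag are illustrative. It refuses to render while any finding is still unreviewed, so drafted text cannot reach the client without a tester signing off.

```python
from dataclasses import dataclass

# Illustrative severity scale used only for ordering the report.
SEVERITY_ORDER = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3, "Info": 4}


@dataclass
class Finding:
    title: str
    severity: str
    description: str       # may be drafted by the model
    impact: str
    remediation: str
    reviewed: bool = False  # set by a human tester after checking the draft


def render_report(findings: list[Finding]) -> str:
    """Render findings, most severe first, once every finding has been reviewed."""
    unreviewed = [f.title for f in findings if not f.reviewed]
    if unreviewed:
        raise ValueError(f"Unreviewed findings: {unreviewed}")
    lines = ["Findings Summary", ""]
    ordered = sorted(findings, key=lambda f: SEVERITY_ORDER[f.severity])
    for i, f in enumerate(ordered, start=1):
        lines += [
            f"{i}. {f.title} ({f.severity})",
            f"   Description: {f.description}",
            f"   Impact: {f.impact}",
            f"   Remediation: {f.remediation}",
            "",
        ]
    return "\n".join(lines)
```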

Responsible Boundaries and Legal Considerations

The same qualities that make agentic LLMs useful also make them potentially risky if not used carefully. Penetration testing must always be conducted within a legally authorized scope. The autonomy of agentic models introduces new concerns about accidental or unintended interactions, such as an agent probing a host outside the agreed scope or running a disruptive test before anyone has reviewed it.

Clear, written authorization is essential before using these models in any testing workflow. The scope must be specific and technically enforced. Human oversight must remain central. Any action that changes system state, attempts exploitation, affects user accounts, or could impact service availability should require explicit human approval.
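Scope enforcement can be as simple as a check that every tool call must pass before it runs. This is a minimal sketch using Python's standard `ipaddress` module; the networks, the excluded host and the `guarded_call` wrapper are hypothetical examples, and a real scope list would come from the signed authorization. Hostnames are rejected outright here, since resolving them safely is a separate problem.

```python
import ipaddress

# Hypothetical scope, transcribed from a written authorization.
AUTHORIZED_NETWORKS = [ipaddress.ip_network("10.10.0.0/24")]
EXCLUDED_HOSTS = {ipaddress.ip_address("10.10.0.1")}  # e.g. a critical gateway


def in_scope(target: str) -> bool:
    """Return True only for an IP address inside the authorized scope."""
    try:
        addr = ipaddress.ip_address(target)
    except ValueError:
        return False  # hostnames and malformed input are rejected by default
    if addr in EXCLUDED_HOSTS:
        return False
    return any(addr in net for net in AUTHORIZED_NETWORKS)


def guarded_call(target: str, action):
    """Run action(target) only if the target passes the scope check."""
    if not in_scope(target):
        raise PermissionError(f"{target} is outside the authorized scope")
    return action(target)
```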

Additionally, every step performed by the model must be logged and reviewable. This protects both the testing team and the client, ensuring accountability and transparency.
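A common way to make logs tamper-evident is hash chaining: each entry stores the hash of the previous one, so editing or removing an earlier entry breaks verification. The class below is a minimal sketch of that idea with illustrative field names. It does not detect entries cut from the end of the log, and anyone who can rewrite the whole log can also recompute the chain, so real deployments would store entries (or at least the latest hash) somewhere the agent cannot modify.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str, detail: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"ts": time.time(), "actor": actor, "action": action,
                "detail": detail, "prev": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link in the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```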

Appropriate and Safe Use-Cases

There are several areas where agentic LLMs provide value with relatively low risk:

- Organizing and enriching OSINT data during reconnaissance

- Reviewing and summarizing the output from existing security tools

- Drafting penetration testing reports and documentation

- Supporting internal practice labs, training exercises and attack simulations

- Validating that temporary test artifacts were removed after an engagement
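The last use case lends itself to a simple automated check, assuming testers record every artifact they create (uploaded files, test scripts and similar) in a manifest during the engagement. The JSON manifest format and the function names below are hypothetical, and this sketch only checks file paths on the machine where it runs.

```python
import json
from pathlib import Path


def load_manifest(manifest_path: str) -> list[str]:
    """Read a hypothetical manifest of the form {"artifacts": ["/path", ...]}."""
    with open(manifest_path, encoding="utf-8") as fh:
        return json.load(fh)["artifacts"]


def leftover_artifacts(artifacts: list[str]) -> list[str]:
    """Return recorded artifacts that still exist, i.e. were not cleaned up."""
    return sorted(p for p in artifacts if Path(p).exists())
```

An empty result means every recorded artifact is gone; anything returned should be removed and the removal noted in the report.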

Activities That Must Remain Human-Controlled

Certain parts of penetration testing require judgment, ethical consideration, and an understanding of real-world consequences. Agentic LLMs should not autonomously:

- Exploit vulnerabilities in a live system

- Attempt privilege escalation or lateral movement

- Perform account takeover or credential attacks

- Conduct phishing or social engineering engagements

- Interact with production environments without direct approval
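These boundaries can be enforced in code with a gate between the agent and its tools. The sketch below is hypothetical: the category names mirror the list above, and the `approve` callback stands in for whatever review step a team uses (a ticket, a chat prompt, a console confirmation). Unknown categories default to requiring approval, so a newly added capability stays blocked until someone classifies it.

```python
from typing import Callable

# Categories mirroring the human-controlled activities listed above.
HUMAN_CONTROLLED = {
    "exploitation", "privilege_escalation", "lateral_movement",
    "credential_attack", "social_engineering", "production_interaction",
}
# Categories a team has explicitly classified as low risk.
LOW_RISK = {"osint_enrichment", "summarize_tool_output", "draft_report"}


def requires_approval(category: str) -> bool:
    """Return True unless the category is explicitly classified as low risk."""
    if category in HUMAN_CONTROLLED:
        return True
    return category not in LOW_RISK  # unclassified categories need approval


def dispatch(category: str, run: Callable[[], str],
             approve: Callable[[str], bool]) -> str:
    """Run the action only if it is low risk or a human explicitly approves it."""
    if requires_approval(category) and not approve(category):
        return f"blocked: {category} requires human approval"
    return run()
```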

Technical Safeguards for Safe Deployment

To use agentic models responsibly, organizations should adopt several technical controls. Agents should operate in isolated environments with limited network permissions. Only approved tools should be available to them. Any action that could have material impact must require manual review. Activity should be logged immutably for later analysis. There should also be a clearly defined response plan if the model attempts to take unintended actions.
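The allowlist and response-plan controls can be combined in one small wrapper. In this hypothetical `ToolRegistry`, the agent can call only tools a human has registered; any request for an unregistered tool is recorded as a violation and, once a threshold is reached, halts the agent until a person reviews what happened. The threshold of one violation is an illustrative choice, not a figure from any standard.

```python
from typing import Callable, Dict, List


class ToolRegistry:
    """Expose only human-approved tools; halt the agent after violations."""

    def __init__(self, max_violations: int = 1):
        self._tools: Dict[str, Callable[..., str]] = {}
        self.violations: List[str] = []
        self.max_violations = max_violations
        self.halted = False

    def approve(self, name: str, fn: Callable[..., str]) -> None:
        """Register a tool; intended to be called by a human operator only."""
        self._tools[name] = fn

    def call(self, name: str, **kwargs) -> str:
        if self.halted:
            raise RuntimeError("agent halted pending human review")
        if name not in self._tools:
            self.violations.append(name)
            if len(self.violations) >= self.max_violations:
                self.halted = True  # trigger the response plan
            raise PermissionError(f"tool '{name}' is not on the approved list")
        return self._tools[name](**kwargs)
```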

These layers of control are not optional; they are foundational.

Adoption Strategy

A mature approach is to introduce agentic LLMs gradually. Begin with supporting roles such as report generation and reconnaissance analysis. Once the team is comfortable with how the system behaves, incorporate supervised automation in controlled lab environments. Over time, the workflow can expand, but always with strong oversight, clear policies and continuous monitoring.

Conclusion

Agentic LLMs offer meaningful advantages for penetration testing when used thoughtfully. They can improve efficiency, consistency and documentation quality, allowing human testers to focus on deeper analysis and creative problem-solving. However, their deployment requires clear legal authorization, human oversight, technical controls and a strong understanding of ethical responsibility.

These systems work best as assistants, not autonomous operators. When we treat them as tools that extend human capability rather than replace it, we gain their benefits while maintaining safety and accountability.